The Shifting Privacy Left Podcast

Shifting Privacy Left features lively discussions on the need for organizations to embed privacy by design into the UX/UI, architecture, engineering / DevOps and the overall product development processes BEFORE code or products are ever shipped. Each Tuesday, we publish a new episode that features interviews with privacy engineers, technologists, researchers, ethicists, innovators, market makers, and industry thought leaders. We dive deeply into this subject and unpack the exciting elements of emerging technologies and tech stacks that are driving privacy innovation; strategies and tactics that win trust; privacy pitfalls to avoid; privacy tech issues ripped from the headlines; and other juicy topics of interest.

The Shifting Privacy Left Podcast

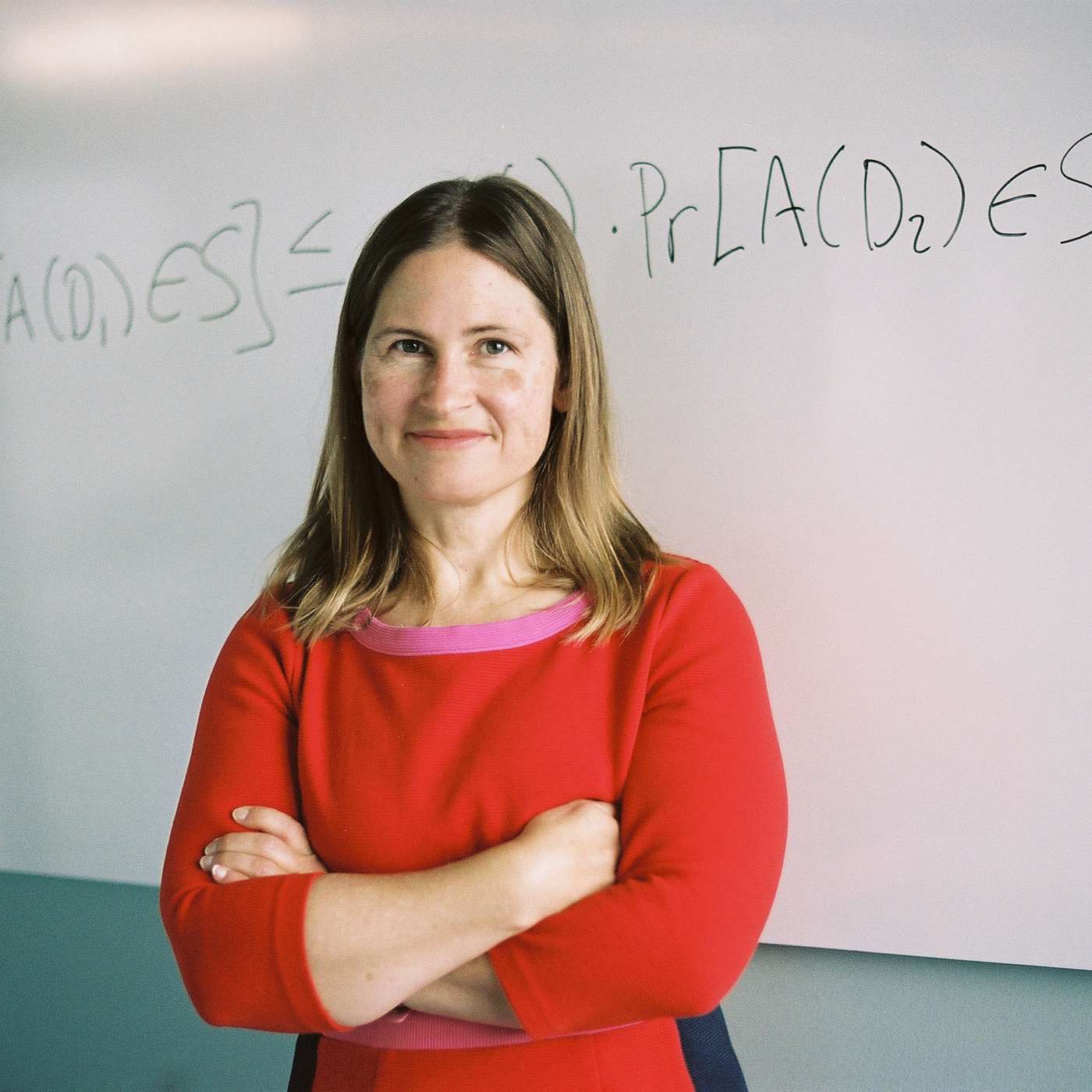

S2E32: "Privacy Red Teams, Protecting People & 23andMe's Data Leak" with Rebecca Balebako (Balebako Privacy Engineer)

This week’s guest is Rebecca Balebako, Founder and Principal Consultant at Balebako Privacy Engineer, where she enables data-driven organizations to build the privacy features that their customers love. In our conversation, we discuss all things privacy red teaming, including: how to disambiguate adversarial privacy tests from other software development tests; the importance of privacy-by-infrastructure; why privacy maturity influences the benefits received from investing in privacy red teaming; and why any database that identifies vulnerable populations should consider adversarial privacy as a form of protection.

We also discuss the 23andMe security incident that took place in October 2023 and affected over 1 million Ashkenazi Jews (a genealogical ethnic group). Rebecca brings to light how Privacy Red Teaming and privacy threat modeling may have prevented this incident. As we wrap up the episode, Rebecca gives her advice to Engineering Managers looking to set up a Privacy Red Team and shares key resources.

Topics Covered:

- How Rebecca switched from software development to a focus on privacy & adversarial privacy testing

- What motivated Debra to shift left from her legal training to privacy engineering

- What 'adversarial privacy tests' are; why they're important; and how they differ from other software development tests

- Defining 'Privacy Red Teams' (a type of adversarial privacy test) & what differentiates them from 'Security Red Teams'

- Why Privacy Red Teams are best for orgs with mature privacy programs

- The 3 steps for conducting a Privacy Red Team attack

- How a Red Team differs from other privacy tests like conducting a vulnerability analysis or managing a bug bounty program

- How 23andMe's recent data leak, affecting 1 million Ashkenazi Jews, may have been avoided via Privacy Red Team testing

- How BigTech companies are staffing up their Privacy Red Teams

- Frugal ways for small and mid-sized organizations to approach adversarial privacy testing

- The future of Privacy Red Teaming and whether we should upskill security engineers or train privacy engineers on adversarial testing

- Advice for Engineering Managers who seek to set up a Privacy Red Team for the first time

- Rebecca's Red Teaming resources for the audience

Resources Mentioned:

- Listen to: "S1E7: Privacy Engineers: The Next Generation" with Lorrie Cranor (CMU)

- Review Rebecca's Red Teaming Resources

Guest Info:

- Connect with Rebecca on LinkedIn

- Visit Balebako Privacy Engineer's website



Disclaimer: This post contains affiliate links. If you make a purchase, I may receive a commission at no extra cost to you.

Copyright © 2022 - 2024 Principled LLC. All rights reserved.

You really need to make sure that if your privacy red team finds something, you have total leadership buy-in and that they're going to make sure it gets fixed. I think that the politics and the funding are so crucial to a successful privacy red team.

Debra J Farber:Hello, I am Debra J Farber. Welcome to The Shifting Privacy Left podcast, where we talk about embedding privacy by design and default into the engineering function to prevent privacy harms to humans and to prevent dystopia. Each week, we'll bring you unique discussions with global privacy technologists and innovators working at the bleeding edge of privacy research and emerging technologies, standards, business models, and ecosystems.

Debra J Farber:Today, I'm delighted to welcome my next guest, Rebecca Balebako, founder of Balebako Privacy Engineer, where she enables data-driven organizations to build the privacy features that their customers love. She has over 15 years of experience in privacy engineering, research, and education; she holds a PhD in engineering and public policy from Carnegie Mellon University and a master's in software engineering from Harvard University, and her privacy research papers have been cited over 2,000 times. Rebecca previously worked for Google on privacy testing and also spent time at RAND Corporation doing nonpartisan analysis of privacy regulations. Rebecca has taught privacy engineering as adjunct faculty at Carnegie Mellon University, where she shared her knowledge and passion for privacy with the next generation of engineers. Today, we're going to be talking about all things privacy red teaming.

Rebecca Balebako:Thank you so much. I'm excited to be here.

Debra J Farber:I'm excited to have you here. Red teaming is definitely near and dear to my heart as a privacy professional because my other half is a pentester and red teamer who works in bug bounty and offensive security. So, we're going to talk about privacy red teaming today, but there are tangents with security, so it's a topic that I'm excited about.

Rebecca Balebako:Yeah, me too.

Debra J Farber:So why don't we kick it off where you tell us a little bit about yourself; and as an engineer, how did you get interested in privacy, and what led you to found Balebako Privacy Engineer?

Rebecca Balebako:I was a software engineer for about a decade, and I really got to the point where just coding wasn't cutting it for me. I wanted to work on something that was more at the intersection of policy or humanities and really delved into what society wants and needs. It was at this point that I discovered some of the work that Professor Lorrie Cranor at Carnegie Mellon was doing on Usable Privacy, and from there, I joined the PhD program with her as my advisor. If you aren't familiar with her, Debra, you've done a previous episode with her, so listeners can go back and listen to Lorrie Cranor's episode. She's amazing. Ever since then, I've been doing privacy engineering work, really trying to find that intersection of engineering, regulation, technology, and society, and I felt that by creating my own company, I could have the most impact and work with a broad range of companies. So that's why I am where I am right now.

Debra J Farber:That's awesome. I love the blend of skills; and, like myself, just one area is not enough. You want to connect the dots across the entire market, the regulation, what's driving things forward in the industry.

Rebecca Balebako:That's right, because you have a background in law, correct? [Debra: Correct, yeah] But, you are now doing a podcast that's really about the technological aspects. How do we shift it left? How do we get it into the engineering aspects? What got you to do that shift?

Debra J Farber:Oh gosh. There are so many reasons; I could do a whole podcast episode on that. For me, I wanted to do more tactical work after law school, and in 2005 there were very few, if any, privacy law practices at law firms. Privacy was handled more sectorally, and operationalizing privacy was a great opportunity because businesses needed people to be hands-on and create processes and procedures for privacy in their organizations, as required by law. So, they were all too glad to have someone who was interested in taking that on at the time, because it was so new. As the industry got more complex, it wasn't only about cookies and small aspects of privacy; it became about how personal data flows through organizations in a safe and privacy-preserving way. Through that process, I learned more about the software development lifecycle to round out my understanding of how systems get developed and products get shipped, and I got more and more fascinated with that. At one point (this is totally off topic from red teaming) I actually thought, "Could I go back to school for electrical engineering?" I had worked in the telecommunications space for a little bit right before law school and found it fascinating. But I found out that I would have to go back and get another bachelor's degree and spend another five years on it, and that just felt like too long. So, I didn't go that direction.

Debra J Farber:But my whole career ended up being a shift left into more technical work.

Debra J Farber:I felt like laws weren't driving things fast enough. They weren't actually curtailing the behavior of companies fast enough for me and for my brain. I could see where things were going, and it took way longer than I wanted for the market to play out and go that direction. I found that working on the tech side, you could actually be working on the cutting edge but also help with the guardrails and make sure we're bringing it to market in an ethical way, rather than working in compliance, or governance, risk, and compliance (GRC), or legal. It's changing, but the way it's been has been very siloed, and I thought that what would really drive things forward is privacy technology, privacy enhancing technologies, and strategies for DevOps. It was obvious to me after years of working in this space that that's really where we could make some immediate changes, without having to wait many years as with legislation, which is important but too slow for me.

Rebecca Balebako:Yeah, I mean, you really speak to me there. I'm a big fan of privacy-by-infrastructure. Let's build it into the technology. Let's get it in the tech stack. Let's not just rely on compliance; let's build it in, and then it can be replicated. I totally agree with you; there are a lot of benefits to shifting privacy left.

Debra J Farber:Yeah, and then I'm just a really curious person. So, I think that these deep dives into different areas of privacy technology on the show, for me, it's a treat to be able to ask all the questions I have. I'm just glad other people find those questions interesting. But, let's get back to you.

Debra J Farber:We're here today to talk about privacy red teaming, but first I want to get to the broader topic of adversarial privacy tests, because I think that's an area you focus on in your work. Can you give us an overview of what 'adversarial privacy tests' are, why they're important, and how we can disambiguate them from other tests in software development?

Rebecca Balebako:Yeah, absolutely. Thank you. Well, 'privacy red teaming' is sort of the hot term right now, but it's just one type of adversarial privacy test. I think we're going to get more into the details of why privacy red teaming doesn't encompass all the types of adversarial privacy tests, but basically, they are tests where you're deliberately modeling a motivated adversary that is trying to get personal data from your organization. So really, the key thing that differentiates them from other types of privacy tests is the adversary. It's not just a mistake in the system or the system not being designed right. There's a motivated adversary, there's personal data, and it's a test.

Rebecca Balebako:The reason I really want to emphasize 'test' is because it's a way to test the system safely. You are trying to attack your organization's privacy, but you're doing it in a way that will not actually cause harm to any users or any people in your data set. Then there's the adversary: you're thinking through what someone is going to try to do that would be bad for the people in your data set, and how they're going to do it with that data; and then you're actually trying to run that test and find out if it's possible. That's a broad definition of adversarial privacy tests, and I think if we keep 1) adversary, 2) personal data, and 3) test all in mind, it's going to help us differentiate it from a lot of other types of testing, and privacy versus security, and so on.

Debra J Farber:Yeah, that makes a lot of sense. I'm wondering, is an adversarial privacy test based on the output of a threat model that you're creating, like the threat actors and all that, or is that part of the adversarial privacy testing process - defining who the threat actors are and threat modeling?

Rebecca Balebako:I've seen it work both ways. I've seen organizations that have the threat model already, and then they realize, "Oh, we probably need to do some specific adversarial privacy testing." But it can also happen that people haven't really thought through their adversaries yet, specifically their privacy adversaries, and so the threat modeling becomes part of the process of doing the privacy red teaming or the adversarial privacy test. It's part and parcel, but which one you realize you need to do first kind of depends.

Debra J Farber:Okay, that makes sense. I would imagine that, since this is still a relatively new concept in organizations, that best practices in setting this up are still being determined.

Rebecca Balebako:Oh, yeah. Thanks for saying that, because I think it's really important to say. There aren't standards, and the various organizations that are doing privacy red teaming haven't really gotten together to define and document all of this. I mean, we're trying. We're trying to move forward and create these clear processes, but it's not an old field with a lot of standards and processes already.

Debra J Farber:Which also makes it an opportunity. [Rebecca: Exactly]. We talked about adversarial privacy tests. How would you define a 'privacy red team?'

Rebecca Balebako:So, a privacy red team is one particular type of adversarial privacy test; and with the privacy red team test, you're going to have: 1) a very specific scope; 2) a specific adversary; and 3) a goal in mind. A privacy red team will try to test your incident management and your defenses, and run through an entire scenario to hit a target. A red team attack should partially test your data breach playbook. It should partially test whether your incident management notices this potential attack going on. It's also different from a vulnerability scan. A vulnerability scan might try a whole bunch of different things, whether or not they lead to a specific goal; whereas with a privacy red team, much like a security red team, you have a goal in mind and you want to do whatever it takes to get there: "Can I re-identify the people in this data set? Can I figure out who the mayor of Boston is in this data set?" You have this specific goal, and so you try to get there.

Debra J Farber:That makes a lot of sense. You just mentioned how privacy red teams are similar to security red teams; privacy red teams borrow the name from security. How are they different?

Rebecca Balebako:There is a fair amount of overlap between privacy red teams and security red teams. There are a couple of differences, but basically, the motivation of the adversary might be different in a privacy threat model than in a security threat model. The adversary is specifically going to be looking at personal data, and they're going to take advantage of features that are working as intended. We've seen this with some of the recent data breaches. Basically, a privacy red team might take advantage of features that are designed to share data, whereas a security red team might not consider that a vulnerability because it's working as intended. Of course, that really depends on the red team and their goals.

Rebecca Balebako:I say this with full respect for security experts because there are so many system vulnerabilities and patches and so on going on that I think it's really hard for security experts to also keep in mind the sliver of vulnerabilities that are specific to privacy. Or, at least, not all security experts have that training and similarly, not all privacy experts (I certainly don't) have that level of skill to recognize the latest security vulnerabilities and Windows patches that are needed. There's a slightly different skill set and also this difference between the motivation and aiming for personal data and features that are working as intended.
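To make the "features working as intended" point concrete, here is a minimal Python sketch of one question a privacy red team might ask: how many people's profiles become visible to an attacker who holds a few compromised logins, purely through a data-sharing feature that behaves as designed? The `shares_with` mapping, the account names, and the `exposed_profiles` function are hypothetical assumptions for illustration, not a model of any real product.

```python
from collections.abc import Mapping, Set


def exposed_profiles(
    shares_with: Mapping[str, Set[str]],
    compromised: Set[str],
) -> set[str]:
    """Return the compromised accounts plus every profile they can see.

    shares_with[account] is the (hypothetical) set of other users whose
    profile `account` can view because a sharing feature works as designed.
    """
    exposed = set(compromised)
    for account in compromised:
        # No bug is exploited here: the attacker just reads what the feature shows.
        exposed |= shares_with.get(account, set())
    return exposed


# Hypothetical sharing graph: alice has opted in to share with three relatives.
shares_with = {
    "alice": {"bob", "carol", "dan"},
    "bob": {"alice", "erin"},
    "carol": {"alice"},
}

print(sorted(exposed_profiles(shares_with, {"alice"})))  # ['alice', 'bob', 'carol', 'dan']
```

One compromised login exposes three other people's profiles without any feature logic being "broken", which is why a review focused only on vulnerabilities could miss it.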

Debra J Farber:I want to underscore something that you just said, and that's that privacy red teaming is about personal data specifically. That brings up a bunch of things that I know you've talked about on your blog. You're really talking about protecting a person, the information that is connected to a person, as opposed to protecting a system or a network; and so, those different goals are just so. . . it seems nuanced, but they're actually vastly different things to protect. So, it makes a lot of sense that security teams would look at things differently. I just know from conversations with security folks, especially hackers, that sometimes, if they're not privacy-knowledgeable, they conflate privacy with confidentiality, which is an aim of security: "Oh, you have a breach, so therefore you have a privacy breach of data," and that's what they think privacy is: security for protecting from breaches.

Rebecca Balebako:To dive into that a little more, I think a privacy red team isn't necessarily going to try and attack your company's financials. It's going to try to attack the company's data that they have about people. Right? It's a different thing. It's not going to try and attack the organization's resources or proprietary information. It's going to be looking at the data about people.

Debra J Farber:Exactly! So, why should organizations invest in privacy red teams? I know it now sounds maybe a little obvious, but what are some of the benefits that can be realized?

Rebecca Balebako:Privacy red teams give you a really unique perspective on the entire thread of an attack. They can chain together a whole series of features working as intended and features not working as intended to come up with something novel: information that you wouldn't necessarily get another way. It's also going to be very realistic. Furthermore, it's going to be ethical, or it should be ethical. I do want to say, though, that while there are lots of benefits to privacy red teams, I don't think all organizations should invest in them.

Debra J Farber:Okay, why is that?

Rebecca Balebako:I think privacy red teams are useful for organizations that already have a pretty mature privacy organization, and there are some privacy maturity models you can look up online. If your system is still at the 'ad hoc' stage, or you don't really have your complete privacy system defined, then privacy red teaming is too easy and too expensive. There's other low-hanging fruit you can tackle first, like getting your privacy organization to be systematic and clear and building in those protections; then you should come back and test everything that you've put in place. I think privacy red teams give you this incredibly realistic understanding of a chain of events, but you do have to have a pretty mature privacy organization in order to make that test worthwhile and useful.

Debra J Farber:That makes a lot of sense. I think later on we'll talk about smaller companies and what they can do, so I don't want to lose that thread; but it makes sense. If you were immature in privacy and started a red team, what would your scope be? If you don't even have a process to take in the results of the test and then fix the issues, then what's the point in doing it? I understand what you're saying. You need a certain level of maturity.

Rebecca Balebako:One of the other benefits of a privacy red team is that you're testing your incident response. If you don't have an incident response team, there's no blue team for the red team to test against, which honestly leads us into the natural question: what is a red team?

Debra J Farber:And what is the blue team? Where does the term come from?

Rebecca Balebako:We are borrowing it from security, and I largely base privacy red teams on the security world. But even before that, it was a military term: a military would have a red team, so people within that military pretend to be the enemy and pretend to attack their own soldiers. The red team would be the pretend bad guys and the blue team would be the good guys, and it gives you a more realistic way of testing how your blue team responds when it's being attacked. That's largely where the term red team comes from. This idea of having an incident response team as your blue team is a really important part of the concept; the realistic attack and the adversary are also important parts, based on this historical meaning and use of the term.

Debra J Farber:I read that it's called 'red team' because of the Cold War era, in relation to Russia. Have you heard that?

Rebecca Balebako:I have heard that.

Debra J Farber:Is that true or is it just folklore?

Rebecca Balebako:I don't have the sources on that, so it could be true. It sounds true, but I don't know.

Debra J Farber:Okay, well, just putting that thought out there to others - that's an interesting factoid, it may or may not be true. So, what exactly do privacy red teamers do? Obviously, we talked about the buckets of things, the outcomes we want from them. But, what are the types of approaches they might take, or some examples of what they actually might be coding up or doing?

Rebecca Balebako:There are three steps to a privacy red team attack: 1) prepare, 2) attack, and 3) communicate. The attack part in the middle gets the most hype. It sounds the most exciting and sexy, like, "Oh, you're going to code up a way to get into the system, or you're going to re-identify the data that's already been supposedly de-identified." Those are the types of attacks you can do, but actually, that's the smallest part of a privacy red team exercise.

Rebecca Balebako:First, you have to prepare, and so, that's defining the scope. Which adversary are you going to model? What are their motivations? What can they actually do? And, you cannot leave that scope.

Rebecca Balebako:And then, you have to think through, "How can we do this attack ethically?" because it could involve real users. Are you actually going to create a different database with a synthetic set of users to run the attack against? What are you going to do to make sure that this attack provides the most benefit while causing the least harm? That's all in the preparation, and that takes time. Then you run your attack, and it could be pretending to be an insider and re-identifying a database. It could be a bunch of different things.

Rebecca Balebako:But then, you have to communicate what the attack actually found, and you have to do this in a very clear way so that anyone reading it understands why they should care; this can be hard. The communication has to be at the very detailed level, like, "Here are the steps we took that created this chain where we were successful or not successful, and here is why you should care. This is why it's actually bad." And then there's the high level, putting it all together so that the leader of the organization understands why they should fix all these things and what is really going on here.
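As a rough illustration of the attack step, here is a minimal Python sketch of a linkage (re-identification) attack run only against synthetic records, in keeping with the ethics of the preparation step. It assumes the adversary holds an auxiliary dataset with names, and that zip code, birth year, and sex act as quasi-identifiers; the field names, records, and the `reidentify` function are hypothetical.

```python
from collections import defaultdict

# Hypothetical quasi-identifiers: not names, but often unique in combination.
QUASI_IDS = ("zip", "birth_year", "sex")


def quasi_key(record: dict) -> tuple:
    """Extract the quasi-identifier values used to link records."""
    return tuple(record[field] for field in QUASI_IDS)


def reidentify(deidentified: list[dict], auxiliary: list[dict]) -> dict[str, str]:
    """Link 'de-identified' rows to named people in an auxiliary dataset.

    A row counts as re-identified only when its quasi-identifiers match
    exactly one named person; ambiguous matches are skipped.
    """
    names_by_key = defaultdict(list)
    for person in auxiliary:
        names_by_key[quasi_key(person)].append(person["name"])
    matches = {}
    for row in deidentified:
        candidates = names_by_key.get(quasi_key(row), [])
        if len(candidates) == 1:
            matches[row["record_id"]] = candidates[0]
    return matches


# Synthetic records only: no real users are touched by the test.
deidentified = [
    {"record_id": "r1", "zip": "15213", "birth_year": 1970, "sex": "F", "diagnosis": "asthma"},
    {"record_id": "r2", "zip": "15213", "birth_year": 1985, "sex": "M", "diagnosis": "flu"},
    {"record_id": "r3", "zip": "02108", "birth_year": 1962, "sex": "M", "diagnosis": "diabetes"},
]
auxiliary = [
    {"name": "Synthetic Person A", "zip": "15213", "birth_year": 1970, "sex": "F"},
    {"name": "Synthetic Person B", "zip": "15213", "birth_year": 1985, "sex": "M"},
    {"name": "Synthetic Person C", "zip": "15213", "birth_year": 1985, "sex": "M"},
    {"name": "Synthetic Person D", "zip": "02108", "birth_year": 1962, "sex": "M"},
]

print(reidentify(deidentified, auxiliary))  # {'r1': 'Synthetic Person A', 'r3': 'Synthetic Person D'}
```

Record `r2` is not re-identified because two synthetic people share its quasi-identifiers; a write-up for the communicate step would explain both the successful links and why the others failed.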

Debra J Farber:What I'm hearing you say is that, besides having some technical skills, red teamers really need to be excellent communicators.

Rebecca Balebako:Absolutely, or someone on the team should be a very good communicator. But at the same time, red team is a team. There is a team in 'red team,' and so while you're doing the attack, you're likely to meet with your team every day, tell them what you worked on that day, what worked and what didn't work, and plan the next day's work. So, you do need to be able to summarize and communicate to your team what you've been doing. It's not really work that speaks for itself. In software engineering, you write your code, it passes the tests, and people can look at it and say, "Oh yeah, you did great work." With red teaming, you really have to be able to communicate it.

Debra J Farber:Yeah, because the decisions will be made, it sounds like, based on that communication.

Rebecca Balebako:Yeah, how convincing you are.

Debra J Farber:Right. Well then, that brings me to the next question, which I'd love for you to unpack for us: how is leveraging a red team different from other privacy tests, like conducting a vulnerability analysis or managing a bug bounty program, where I know it's also important to have good communication skills?

Rebecca Balebako:Yeah, absolutely. I think the reason we're focusing on red teaming is because it's kind of a hot term right now and people are wondering what it is and some companies are hiring for it; but it doesn't necessarily mean that a privacy red team is the right thing for your organization. A vulnerability analysis is also a really great way to do privacy testing. What a vulnerability analysis will do is perhaps look at an entire product or feature and scan through all the potential things that could be wrong from a privacy perspective.

Rebecca Balebako:Imagine there's a whole bunch of doors. In a vulnerability analysis, you're going to try to open each one of them, and at the end you're going to have a list of all the doors that could be opened. Whereas in a privacy red team attack, as soon as you can open a door and get in, you're going to use it to continue the attack and get to your goal. So, you might not necessarily do the same scan of trying to open all the doors. You might notice, "Hey, that door looked like it would probably be easy to open, but we didn't actually test it." It's a little bit different in terms of scope.
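The doors analogy can be sketched in Python under a hypothetical model in which each "door" leads from one position to another and either opens or doesn't. A vulnerability analysis tries every door and lists the ones that open; a red team searches outward from its starting point and stops as soon as it reaches its goal, leaving some doors untested. The position names and both functions are illustrative assumptions, not a real tool.

```python
from __future__ import annotations

from collections import deque

# Hypothetical model: (from_position, to_position, openable)
Door = tuple[str, str, bool]


def vulnerability_scan(doors: list[Door]) -> list[tuple[str, str]]:
    """Try every door and report each one that opens, whatever the goal."""
    return [(src, dst) for src, dst, openable in doors if openable]


def red_team_path(doors: list[Door], start: str, goal: str) -> tuple[list[str] | None, int]:
    """Chain open doors outward from start; stop as soon as the goal is reached.

    Returns the path taken (or None) and how many doors were actually tried.
    """
    exits: dict[str, list[tuple[str, bool]]] = {}
    for src, dst, openable in doors:
        exits.setdefault(src, []).append((dst, openable))
    tried = 0
    visited = {start}
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        for dst, openable in exits.get(path[-1], []):
            tried += 1  # this door was actually tested
            if not openable or dst in visited:
                continue
            if dst == goal:
                return path + [dst], tried
            visited.add(dst)
            queue.append(path + [dst])
    return None, tried


doors = [
    ("outside", "lobby", True),
    ("outside", "loading_dock", True),
    ("lobby", "records_room", True),
    ("lobby", "server_room", False),
    ("loading_dock", "warehouse", True),
]

print(vulnerability_scan(doors))
print(red_team_path(doors, "outside", "records_room"))  # (['outside', 'lobby', 'records_room'], 3)
```

The scan reports all four doors that open, while the red team reaches `records_room` after testing only three of the five doors; the `server_room` and `warehouse` doors are never tried.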

Debra J Farber:I might be asking you this question earlier than anticipated, but your response there brings up bug bounty programs and whether or not you think bug bounties are going to be a thing in the future. Previously, I've been excited because I thought that this could be a real opportunity. Successes in security show that you can crowdsource finding bugs in software and only pay on performance, so it's been really great for security teams to gain hacking resources outside of their organizations and only pay when bugs are found. But what I just heard you say is that you might have to keep going further in scope once a door is open. You want to still go further. That, to me, sounds like you would need internal people who have permission to do that, and that you might want to keep that within your organization rather than have external hackers try to find privacy bugs or penetrate that deeply.

Rebecca Balebako:I think it's going to really depend on your organization and its goals. I think there are some hurdles in the privacy community. Some problems that we. . . no, not problems, opportunities for us to solve before we're going to see successful privacy bug bounties. Privacy bug bounties, as you said, seem great. You can just crowdsource it. You only have to pay when someone successfully finds a vulnerability. You don't have to have a full red team coming into your company and penetrating it. But they do have problems: bug bounties are unexpectedly expensive for privacy, because you need to evaluate every bug that comes in, and each bug is documented risk. It's telling you about a potential problem.

Rebecca Balebako:If you do not have a quick way to assess how bad a vulnerability is, you could spend a lot of resources going back and forth trying to figure out whether it should be fixed or not. So I'm going to make up an example of a company or a feature. Let's say you are a photo sharing app, and you're like, "You know what, we delete photos after seven days, so we protect your privacy. Don't worry, they're just temporary photos." And then you get a report that's like, "You know what? My photo is still here, so you didn't delete it in seven days."

Rebecca Balebako:And then the company has to go look at it, and they're like, "Well, actually, you saved it to your favorites, and when you do that, we assume that you want it retained for longer." And the user's like, "Well, I know I can delete it myself if I save it." Then you start this back and forth of, "Was it a communication thing? Was it a feature thing?" It can be really hard for something like that. The company built the feature assuming that if you save a photo to your favorites, you don't want it automatically deleted; the customer assumed that it was going to be automatically deleted. It's not really clear whether the company should jump up, stop all its other product work, and fix that privacy issue, or how to fix it.
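The photo example boils down to two retention rules that disagree. The minimal Python sketch below, assuming a hypothetical `Photo` record and a seven-day window, encodes the company's rule (favorites are exempt) next to the user's expectation (everything goes after seven days) and lists the photos where the two diverge, which is exactly the kind of gap such a bug report surfaces.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

RETENTION = timedelta(days=7)  # the advertised "we delete photos after seven days"


@dataclass
class Photo:
    photo_id: str
    uploaded_at: datetime
    favorited: bool = False


def company_deletes(photo: Photo, now: datetime) -> bool:
    """Company rule as built (hypothetical): favorites are exempt from auto-deletion."""
    return not photo.favorited and now - photo.uploaded_at >= RETENTION


def user_expects_deleted(photo: Photo, now: datetime) -> bool:
    """User's reading of the promise: every photo is gone after seven days."""
    return now - photo.uploaded_at >= RETENTION


def expectation_gaps(photos: list[Photo], now: datetime) -> list[str]:
    """IDs of photos the user believes are deleted but the system still retains."""
    return [
        p.photo_id
        for p in photos
        if user_expects_deleted(p, now) and not company_deletes(p, now)
    ]


now = datetime(2023, 10, 20)
photos = [
    Photo("a", datetime(2023, 10, 1)),
    Photo("b", datetime(2023, 10, 1), favorited=True),
    Photo("c", datetime(2023, 10, 18), favorited=True),
]
print(expectation_gaps(photos, now))  # ['b']
```

Only `b` is flagged: it is old enough that the user expects it gone, but favoriting exempted it. The code can't say whether the fix is a feature change or clearer communication; that remains the judgment call described in the example.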

Rebecca Balebako:So, the security community has a security vulnerability scoring system, and people argue about it. They don't all love it, but at least they have it, and the community has more or less agreed on it. We don't really have a privacy bug vulnerability score, and so that's an opportunity for the community to get together and develop some standards around that. Once we have that, companies with a privacy bug bounty could use these agreed-upon standards to assess more quickly how bad a vulnerability is, and then make a decision. Otherwise, they're just sitting on risk, and it takes a lot of work to decide whether they're going to work on it or not.
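Since there is no agreed privacy vulnerability score, any scoring today is a local convention. Purely as a hypothetical illustration of what a quick triage rubric could look like, the Python sketch below combines data sensitivity, the number of people affected, whether privileged access is needed, and whether the behavior contradicts what users were told. The factors, weights, and thresholds are assumptions made up for this example, not a proposed or existing standard.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    LOW = 1       # e.g. a display name
    MODERATE = 2  # e.g. an email address or location history
    HIGH = 3      # e.g. health, genetic, or ethnicity data


def privacy_triage_score(
    sensitivity: Sensitivity,
    people_affected: int,
    needs_privileged_access: bool,
    contradicts_user_expectations: bool,
) -> int:
    """Toy triage score from 2 (least severe) to 10 (most severe).

    The factors and weights are illustrative assumptions, not a standard.
    """
    score = 2 * sensitivity  # 2, 4, or 6
    if people_affected >= 1000:
        score += 2
    elif people_affected > 1:
        score += 1
    if not needs_privileged_access:  # exploitable without privileged access
        score += 1
    if contradicts_user_expectations:  # behavior differs from what users were told
        score += 1
    return score


print(privacy_triage_score(Sensitivity.HIGH, 1_000_000, False, True))  # 10
print(privacy_triage_score(Sensitivity.LOW, 1, True, False))  # 2
```

Whatever its exact factors, a shared rubric is what would let a team answer "how bad is this?" quickly instead of through a long back-and-forth.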

Debra J Farber:You don't even know the size of it, because how do you assess the size of the risk? So, that makes it tough. What work needs to happen in the industry or within companies in order for bug bounties to be realistic, then? Is it just having standards? I don't want to say 'just;' obviously that's a huge undertaking. But would that be the hurdle, or are there some others?

Rebecca Balebako:There's some other hurdles because privacy has grown a lot from legal. [Debra: It has. It's true.]

Rebecca Balebako:And it sometimes has - how do I put this? You're the lawyer - a risk-averse nature when it comes to documenting potential problems; whereas the security community, the engineering community, is much more like, "Write down the problem, document it, and then we can decide where to fix it." When we're sort of saying, "Don't write down the problems, I don't even want to know the problems," if that's the culture that exists... I'm not trying to blame employers, right, but it's just a different culture. It's a fine culture, but it doesn't really work for a privacy bug bounty.

Debra J Farber:It's a risk-based culture that doesn't scale well to engineering.

Rebecca Balebako:It's just a different culture and if that's where your organization is, it's going to be really hard to overcome those antibodies to make a privacy bug bounty effective.

Debra J Farber:That's fascinating. You've given me a lot to think about, but I think that makes a lot of sense. Okay, let's get away from bug bounties for a little bit and get back to red teaming. What are some examples of breaches that might have been prevented by adversarial privacy testing, either in terms of red teaming, or vulnerability scans, or privacy-related, obviously?

Rebecca Balebako:I think anytime you see something in the news that seems like a privacy leak, but the company is actually saying, "Oh, we haven't detected a security data breach," then it's probably something a privacy red team would have identified.

Debra J Farber:I have an example, I think. Have you heard about the recent 23andMe security incident? It was a credential stuffing attack, and they found a list of 1 million Ashkenazi Jews, and I think also a list of Chinese users, and all of their connected trees and relatives if you've shared with other people. In this credential stuffing attack, they were able to get a list of all those users, their email addresses, or whatnot. But then, 23andMe was like, "Oh well, an incident was detected but, whatever, it wasn't us, you know, we didn't suffer a breach." And it was very focused on the legal definition of a breach.

Debra J Farber:I am an Ashkenazi Jew, so for me, with what's going on in the Middle East right now, this is actually kind of scary. Even though I wasn't affected in the attack itself, people I was connected to on 23andMe were; and therefore, I've been alerted by 23andMe that my info has been exposed. But they're like, "It's not our breach, we didn't do it, you might be affected." That was their communication. So, might a privacy red team have been able to surface a risk like that?

Rebecca Balebako:Before I talk about the technical aspects of that, I just want to say that particular incident makes me so sad. I mean, as you said, there's anti-Semitism, which is horrible. There are the events going on. I mean, it's October 2023, for anyone who listens to this podcast later. For anyone who even saw the headlines, like, "Oh, lists of different ethnicities, of Jewish people, are available on the dark web," that's already scary. People feel scared. [Debra: Yeah, Hitler would love a list like that. You know?] Yeah, and the emotions are there, and 23andMe has kind of missed the boat on acknowledging that there's real fear here. So, I just want to acknowledge that emotional aspect of it, and now I'm going to talk a little bit about the technical aspects. Between you and me, let's call it a breach, even if they don't.

Debra J Farber:Exactly. It's a breach of trust. It's a breach in the relationship I have with 23andMe, after all the representations they've made over the years about how they keep genetic data separately from. . . that it can't be breached because they keep it completely separate from your identity. And then they blame the user: "Oh, change to strong passwords and don't reuse them on the web." Right, well, I did everything right, and I'm still being told, you know, that I've been affected. And they wouldn't even mention that it was my Ashkenazi Jewish information that was compromised. They kept that out of the communication, too. It was only through news articles that I was able to put those things together, and so the comms was. . . I'm, like, really pissed at them because they've eroded trust with me over the years now. Right? So, a breach is a breach.

Rebecca Balebako:I think one of the reasons a privacy red team may have caught this, where a security team potentially may not, is that it's this combination of the credential stuffing and using a feature as intended.

Rebecca Balebako:So, it has a "find people with similar DNA to me" feature. That's how people are finding out about half brothers and sisters they didn't know about. There are all sorts of interesting privacy implications of that feature, but that feature is working as intended. So, it's a combination with the credential stuffing. Right? So, I think that's what you have here: an organization that doesn't match its authorization and authentication strength to the sensitivity of the data. Right? It's just a username and password, and it's super sensitive information about vulnerable populations. It's super scary to see that it's on the dark web. There was a mismatch in that design in the company, and I think they didn't think about it because, "Hey, the feature is working as intended," or, as you said, they're blaming the user, like, "Oh, your password wasn't strong enough."

Debra J Farber:To that point, they made two-factor authentication optional, and I guess, in many ways, there might have been a discussion that went, "Oh well, let's give the user the option. This way they're not forced to go through a gating process that takes away from the design and makes it harder to use." Right, you want usable products, so don't give users too many hurdles from a login perspective. Like, let's give them the option if they want more security on their account, right? But you could see here how, like, I'm almost a bystander and I'm affected. So there was some more threat modeling that needed to be done for privacy, and if they had made 2FA mandatory, perhaps that would have been a better control to have in place, instead of leaving it to users to assess the risk for themselves and determine, given how sensitive the data is, whether or not they want to use 2FA. I know I just kind of threw this example at you.

Rebecca Balebako:It's a real example. It's a good one. I mean good in terms of, like, what it highlights. But it is so sad.

Debra J Farber:It makes me angry, obviously because I'm, like, affected, too; but it makes me angry in that they didn't think that that feature. . . it seems to me that they classified the genetic data itself, like the actual genetic data, as super sensitive or whatever, and did all of the infrastructure implementation on that, but that they just kind of left the door wide open on certain other areas. Like the Jewish people, for instance, being both an ethnicity and a religion, and just not thinking about it in those terms, where now our ethnicity has been added to a list somewhere on the dark web with all our data. So, you know, that's scary.

Rebecca Balebako:Debra, actually, when I heard about this breach, I had to look up what Ashkenazi is. Maybe you want to explain it to listeners a little bit?

Debra J Farber:Oh yeah! Thank you for that, because I am making assumptions that people know what I am talking about. Yeah, so the Jewish people. . . I think there are three groups, maybe there are four, but there are three that I know of that are ethnically distinct, like in our mitochondrial DNA, going back through the maternal line to Jewish women. They are their own ethnic groups, with actual DNA lineages that are different from other people's. You could track the lineage of the different Jewish people based on this.

Debra J Farber:Ashkenazi Jews are the ones during the diaspora that kind of came into Europe and have lighter skin as a result of many years in Europe and maybe intermarriage and whatnot.

Debra J Farber:There are other groups that are more Middle Eastern and have always stayed there, or that went through Spain and have darker features, and so that's Sephardic and Mizrahi. A lot of the Mizrahi Jews, I believe, live in Israel; I actually don't really know many, because many still live in the Middle East. Then, I think there might be a fourth group that's smaller, that I don't know of. I think Ashkenazi is one of the largest groups. So, most of the Jews from Eastern Europe, who spread out to all those countries, were Ashkenazi. So am I. I know plenty of Persian Jews. They're typically Sephardic, with slightly different religious customs that reflect the fact that they have lived all across the world, and so regionally there are some cultural differences among these groups. But in terms of lineage, you could trace each of these groups back along, like I said, a long lineage of Jewish people over hundreds of years, thousands of years.

Rebecca Balebako:Yeah, thank you. Thank you for explaining that, because I think we can even take it up a level. Anytime your database reveals groups of vulnerable populations (and I will include Ashkenazi Jews in those vulnerable populations because there is so much anti-Semitism, but it could also be identifying Black churches in the U.S., or whatever), anytime your database lets you figure out and identify people who have historically been more likely to face violence or disproportionate harm, you have to start thinking about adversarial privacy. You have to think about motivated adversaries who are gonna try to cause harm to people, and how you can protect them.

Debra J Farber:And then thinking of privacy harms. That's the other thing. You're not necessarily thinking about the confidentiality, integrity, and availability of systems here. Right? Instead, you're thinking about surveillance: will people feel we've invaded their privacy because we know too much about them? All of the different Solove privacy harms should be thought about.

Rebecca Balebako:Absolutely.

Debra J Farber:So, it's just a different outcome that you're focused on. There's a growing trend that I see in the privacy engineering space, where big tech companies seem to be the first ones building and deploying privacy red teams, but they're also some of the most, let's say, notorious privacy violators in terms of the fines they've had and maybe some of their past approaches. So, we're talking Meta, TikTok, Apple, and Google; they all have privacy red teams, or at least state that they're building in that space. So, does this mean that privacy red teams are ineffective if privacy violators are the ones using them, or is it more that they are starting a great trend, they have the resources, and this is making their practices more effective?

Rebecca Balebako:I think we have to be very careful about the direction of causation here. It's probably because of those fines that many of these companies have more mature privacy organizations. As I mentioned earlier, if you're still at the ad hoc stages of your privacy organization, a privacy red team probably isn't right for you. These companies that have faced regulatory scrutiny have been required to upgrade their systems. They're more mature privacy organizations, and that's when an organization should start thinking about privacy red teams. It's not that privacy red teams don't work; it's just that you need to be pretty mature, and a lot of companies become mature because of those fines and because of those regulations.

Debra J Farber:Awesome. Well, not awesome, but that was a helpful response. I was gonna ask you if there was a frugal way to set up a privacy red team in a small or mid-sized org; but, for this purpose, let's say not only is it small or mid-sized, but it's also got a mature privacy team. Maybe it's just not an enterprise. How would a company go about this if it doesn't have a lot of money, but it does have a mature privacy team and is small or mid-sized? How do you see that being implemented, or what are some best practices to think about?

Rebecca Balebako:There's a lot of other privacy testing that can come before privacy red teaming and add a lot of value.

Rebecca Balebako:If you have all that in place and you know you want to do privacy red teaming because you know there are adversaries out there who are motivated to attack your data, one thing you can do is train some of your security folks in privacy. Then, if they can put some chunk of their time into privacy and get that privacy mindset, that's a more affordable way to start something like adversarial privacy testing. I think there are other types of privacy tests I would recommend first. . . so, for example, I know Privado is the sponsor of this podcast, and they do static analysis of your code and can give you results. That's the kind of constant monitoring and privacy testing that's not adversarial, but might be a really good first step. It might actually be cheaper than implementing a privacy red team. [Debra: That makes sense, too.] If you know you need a privacy red team, but you don't have a lot of cash, then maybe think about training some security folks. Or maybe, if you have some privacy engineers, think about training them for adversarial privacy.

Debra J Farber:That actually brings up a really good point. Do you see privacy red teaming growing more from upskilling security engineers or security red teamers, or from taking technical privacy folks, privacy engineers, and turning them into red teamers? Or do you think it's a combination of both?

Rebecca Balebako:I think it's gonna be a combination of both, and the teams should have a mix of skills. Also, I think there are way more external red teaming and pen testing consultants than there are companies that have it all in-house. You don't need to grow it within your org; you can hire it externally and just have it done once a year, twice a year, you know, as opposed to continually staffing a privacy red team. That makes sense.

Rebecca Balebako:I do see it potentially growing more through contract, part-time, external consultants, who obviously sign an NDA and obviously work only within the scope, before I see a lot of in-house teams.

Debra J Farber:That is interesting. Thank you. And then, for Engineering Managers who are seeking to set up a privacy red team for the first time, what advice do you have for them to get started?

Rebecca Balebako:When I talked about what the actual work in a privacy red team is, I mentioned that the first step is preparing. Even just to set up a red team, the first step is preparing, and you really need to have leadership engaged. You really need to make sure that if your privacy red team finds something, you have total leadership buy-in and they're gonna make sure it gets fixed. I think the politics and the funding of it are so crucial to a successful privacy red team. Then, when it comes to hiring, I would say, look for a mix of privacy and security folks, and I would also include usable privacy people, or people who have some experience thinking about the usability perspective, the user side of things. If a user gets a notice, or if they see a certain setting, are they gonna understand it or use it in a certain way? That can be part of privacy red teaming.

Debra J Farber:Oh, fascinating. What resources do you recommend for those who want to learn more about privacy red teaming?

Rebecca Balebako:Well, thanks for asking. I put together a list of red team articles, guides, and links to courses on adversarial privacy testing on my website. There's a special page just for listeners of this podcast.

Debra J Farber:I will definitely put that in the show notes, but I'll also call it out here. It's www.privacyengineer.ch/shiftleft.

Rebecca Balebako:Exactly. So, '.ch' is the country domain for Switzerland. I'm based in Switzerland, so that's why it's privacyengineer.ch, and 'shiftleft' is a reference to the podcast and a way to thank all the listeners for sticking around with us.

Debra J Farber:Thank you so much for doing that. I think it's such a great resource and I hope people make use of the resources there. Any last words before we close for today?

Rebecca Balebako:It's been a real delight. Thank you so much for having me. I love your podcast. There's so many interesting people to listen to, so thank you.

Debra J Farber:Well, thank you, and thank you for adding to the many interesting people to listen to, because I think you're one of them. I'd be delighted to have you back in the future to talk about the developments in this space, and I'll be watching your work. You're definitely one of the leaders in my LinkedIn network, at least in privacy red teaming and privacy adversarial testing, and so now, I hope people engage you from listening to this conversation, since you have your own consulting firm. If you have any questions about privacy red teaming, reach out to Rebecca. So, Rebecca, thank you so much for joining us today on The Shifting Privacy Left Podcast to talk about red teaming and adversarial privacy testing. Until next Tuesday, everyone, when we'll be back with engaging content and another great guest.

Debra J Farber:Thanks for joining us this week on Shifting Privacy Left. Make sure to visit our website, shiftingprivacyleft.com, where you can subscribe to updates so you'll never miss a show. While you're at it, if you found this episode valuable, go ahead and share it with a friend; and if you're an engineer who cares passionately about privacy, check out Privado: the developer-friendly privacy platform and sponsor of the show. To learn more, go to privado.ai. Be sure to tune in next Tuesday for a new episode. Bye for now.
